Microsoft Copilot for Security is a new AI-based tool that takes signals from various sources and uses that data as an additional input and research layer. Microsoft Copilot for Security is built on a specialized language model that includes security graph data.

Microsoft Copilot for Security was recently announced as generally available to customers. It is no longer limited to the private preview, which means it can easily be enabled in customer tenants. Of course, running Microsoft Copilot for Security costs money.

Microsoft Security Copilot is an AI-enabled cybersecurity solution that processes signals and correlates events from multiple sources. Copilot for Security leverages GPT-4, which is developed and trained by OpenAI. On top of the OpenAI layer sits the Microsoft security model, which includes trillions of daily security signals.

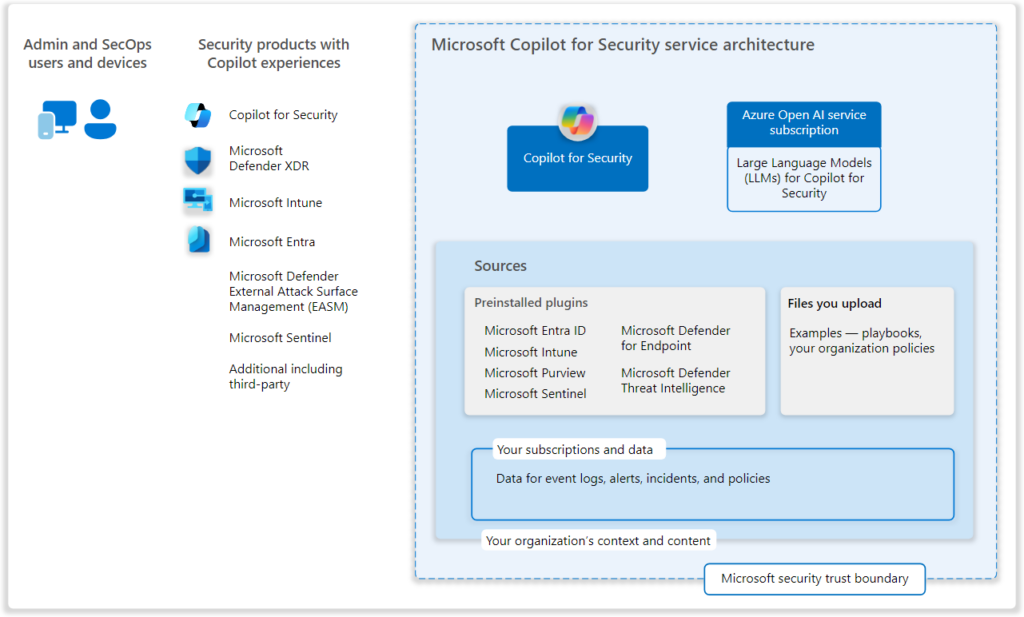

Logical architecture

The following logical architecture shows the sources and service architecture of Microsoft Copilot for Security. Good to know: each Microsoft security product with an embedded Copilot experience only provides access to the data set associated with that product. Copilot for Security itself provides access to all the data sets the user has access to. For example, a user who only has Defender XDR gets access to the Defender XDR data, but not to Microsoft Entra or Microsoft Intune data.

Image source: Microsoft

How does Microsoft Copilot for Security work?

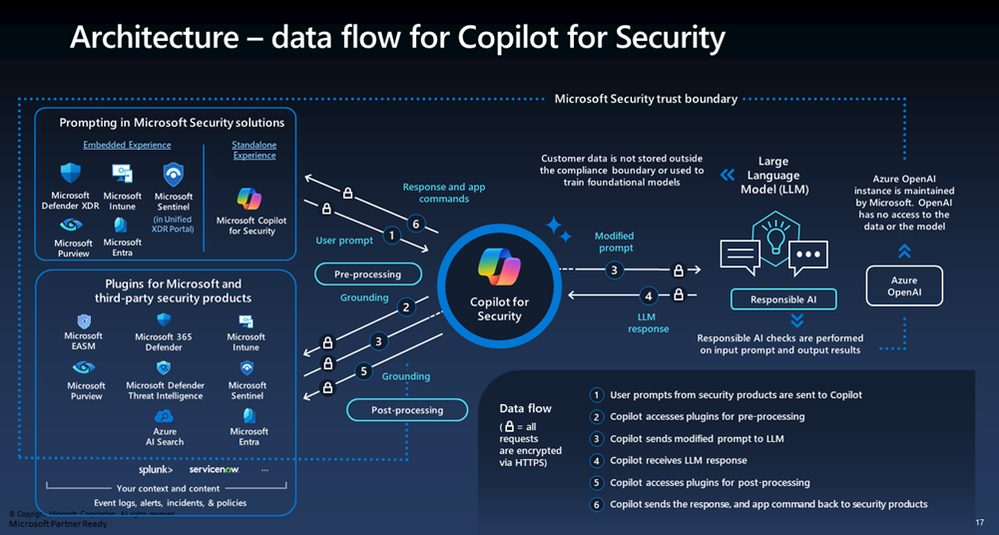

Microsoft Copilot for Security is driven by user prompts. As shown in the screenshot above, a prompt is processed in the following order:

- User prompts from security products are sent to Security Copilot (input)

- Security Copilot accesses plugins for pre-processing

- Security Copilot sends a modified prompt to the LLM

- Security Copilot receives the LLM response

- Security Copilot accesses plugins for post-processing

Copilot uses the plugins available to the user to leverage security-specific skills, which include specific sets of cyber skills or threat intelligence data.
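To make the order above more concrete, here is a minimal conceptual sketch in Python. It is not how Security Copilot is implemented internally: `run_prompt`, the plugin functions, and `fake_llm` are hypothetical stand-ins that only illustrate the input, pre-processing, LLM, and post-processing flow.

```python
from typing import Callable, List

# Hypothetical type: a plugin step takes text and returns (possibly enriched) text.
PluginStep = Callable[[str], str]


def run_prompt(
    user_prompt: str,
    pre_plugins: List[PluginStep],
    call_llm: Callable[[str], str],
    post_plugins: List[PluginStep],
) -> str:
    """Conceptual prompt flow: input, pre-processing, LLM, post-processing."""
    # 1. Input: the prompt arrives from a security product.
    prompt = user_prompt
    # 2. Pre-processing: plugins enrich the prompt (for example with incident data).
    for step in pre_plugins:
        prompt = step(prompt)
    # 3 + 4. The modified prompt is sent to the LLM and the response comes back.
    response = call_llm(prompt)
    # 5. Post-processing: plugins refine or ground the response.
    for step in post_plugins:
        response = step(response)
    return response


# Hypothetical stand-ins for plugins and the model, for illustration only.
def add_incident_context(prompt: str) -> str:
    return prompt + " [context: incident 621]"


def fake_llm(prompt: str) -> str:
    return f"Summary of '{prompt}'"


def add_source_note(response: str) -> str:
    return response + " (source: Defender XDR)"


print(run_prompt("Summarize incident", [add_incident_context], fake_llm, [add_source_note]))
# Summary of 'Summarize incident [context: incident 621]' (source: Defender XDR)
```

The point of the sketch is that the LLM never sees the raw user prompt alone: plugins shape what goes in and what comes out.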

Setting up Microsoft Copilot for Security

Microsoft Copilot for Security is generally available as of April 1, 2024. Microsoft Copilot for Security is available as a standalone experience or embedded in Defender XDR as part of the integration with security.microsoft.com.

Prerequisites

First of all, we need the following prerequisites before we can enable Microsoft Copilot for Security:

- Azure subscription

- Azure Owner or Contributor role (at least at the resource group level)

Money/ cost

As mentioned, Microsoft Copilot for Security is not free; its cost model is based on the resources used. Good to know: it is not part of Copilot for Microsoft 365, which is a different service. Copilot for Microsoft 365 charges a fixed monthly fee, whereas Copilot for Security uses consumption-based, pay-as-you-go pricing based on the usage of Security Compute Units (SCUs).

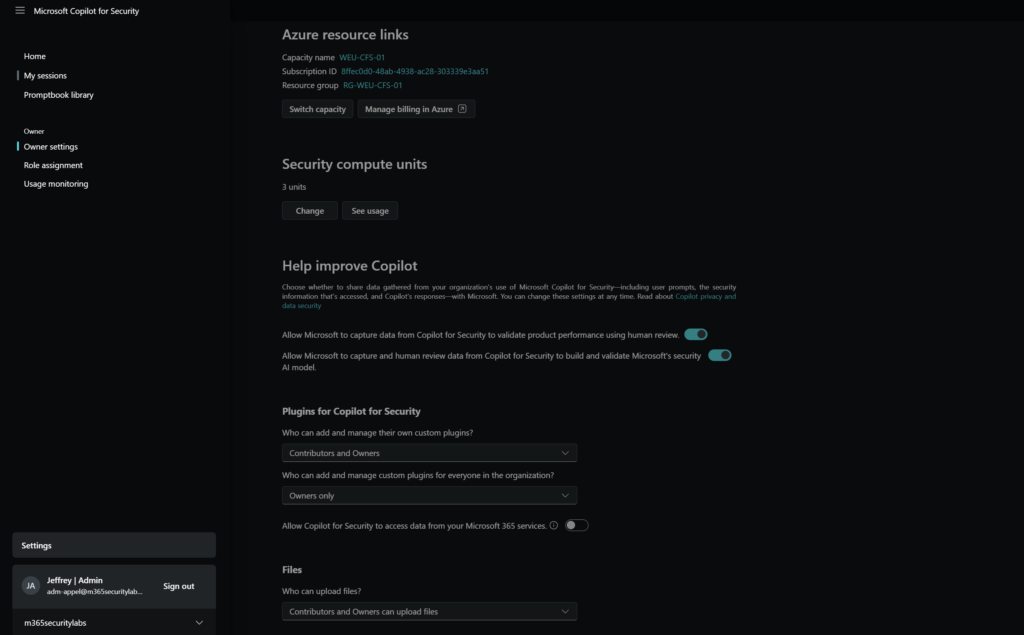

Security compute units are the resources required for consistent performance of Microsoft Copilot for Security. Each SCU is billed per hour, and you can increase or decrease the number of units at any time. Billing is calculated on an hourly basis with a minimum of one hour. This means you pay for at least one hour after enabling Copilot for Security with 1 SCU.
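A minimal sketch of that billing rule, to make the arithmetic explicit. It assumes every provisioned SCU is billed per hour, that a partial hour is rounded up to a full hour (an assumption, not stated by Microsoft here), and that at least one hour is always billed. The hourly rate is a parameter and the value used below is only an example; check the current Azure pricing for your region.

```python
import math


def estimate_scu_cost(units: int, hours_provisioned: float, rate_per_scu_hour: float) -> float:
    """Estimate Copilot for Security capacity cost (illustrative, not official logic).

    Assumptions:
    - every provisioned SCU is billed per hour,
    - partial hours are rounded up to a full hour,
    - at least one hour is billed once the capacity is enabled.
    """
    if units < 1:
        raise ValueError("at least 1 SCU must be provisioned")
    billed_hours = max(1, math.ceil(hours_provisioned))
    return units * billed_hours * rate_per_scu_hour


# 1 SCU enabled for 15 minutes is still billed as one full hour.
print(estimate_scu_cost(1, 0.25, 4.0))
# 3 SCUs running around the clock for 30 days (720 hours).
print(estimate_scu_cost(3, 720, 4.0))
```

The second call shows why leaving 3 units running permanently adds up quickly, which is the motivation for scaling the capacity down outside working hours.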

Based on my evaluation activities, a good starting number is at least 3 units. It all depends on the environment: with the performance insights, it is completely fine to start with just 1 unit and review the performance data to determine the right number of units. From my experience, 1 unit is too low for the more advanced prompts, but it is fine for evaluation. For production environments, my recommendation is a minimum of 3 units, even for simple prompts. When needed, you can configure flows via Logic Apps to scale the capacity automatically based on demand and turn it down when Security Copilot is not in use.
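The decision part of such a scaling flow can be very simple. The sketch below shows one possible schedule-based policy in Python; the function name, business hours, and unit counts are hypothetical, and the actual scaling would still be performed by the Logic App against the capacity resource in Azure.

```python
from datetime import datetime


def target_scu_count(
    now: datetime,
    business_units: int = 3,
    off_hours_units: int = 1,
    start_hour: int = 8,
    end_hour: int = 18,
) -> int:
    """Return the SCU count a scheduled scaling flow could set (hypothetical policy).

    Weekday business hours get business_units; evenings and weekends
    fall back to off_hours_units to reduce hourly cost.
    """
    is_weekday = now.weekday() < 5  # Monday=0 ... Friday=4
    in_business_hours = start_hour <= now.hour < end_hour
    return business_units if is_weekday and in_business_hours else off_hours_units


print(target_scu_count(datetime(2024, 5, 17, 9, 0)))   # Friday morning
print(target_scu_count(datetime(2024, 5, 18, 9, 0)))   # Saturday morning
```

A schedule is only one option; a flow could just as well react to the usage data described in the Usage section.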

SCUs are provisioned on an hourly basis, and the estimated monthly cost is displayed. This means you can always reduce the number of SCUs or turn off Security Copilot; billing is calculated per hour.

Onboarding of Copilot for Security

The onboarding of Copilot for Security is not difficult and is natively integrated into the securitycopilot.microsoft.com portal. At a high level, the following steps need to be taken:

- Log in to the portal securitycopilot.microsoft.com

- Choose an Azure subscription and Azure resource group

- Select a geographical location for prompt evaluation, to ensure data remains in the home tenant's geo

- Configure the number of SCUs

- Agree to the terms and conditions

- Assign roles to users and start using Copilot for Security

Deploy Copilot for Security

First, we need to onboard Copilot for Security. To create the capacity resource, you need to be an Azure Owner or Contributor on the subscription or resource group. To finalize the onboarding, the Global Administrator or Security Administrator role is sufficient.
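Because the two onboarding steps need different permissions in different systems (Azure RBAC and Entra), it can help to check both before starting. A minimal Python sketch of that check, using only the role names mentioned in this article; the function is hypothetical and does not query Azure or Entra.

```python
# Roles named in this article for each onboarding step.
AZURE_CAPACITY_ROLES = {"Owner", "Contributor"}
ENTRA_SETUP_ROLES = {"Global Administrator", "Security Administrator"}


def onboarding_blockers(azure_roles: set[str], entra_roles: set[str]) -> list[str]:
    """Return the missing prerequisites for onboarding; an empty list means ready."""
    blockers = []
    # Step 1: creating the capacity needs Owner or Contributor in Azure.
    if not azure_roles & AZURE_CAPACITY_ROLES:
        blockers.append("Azure Owner or Contributor role is needed to create the capacity")
    # Step 2: finishing the onboarding needs an Entra admin role.
    if not entra_roles & ENTRA_SETUP_ROLES:
        blockers.append("Global Administrator or Security Administrator role is needed to finish onboarding")
    return blockers


print(onboarding_blockers({"Contributor"}, {"Security Administrator"}))  # []
print(onboarding_blockers({"Reader"}, set()))
```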

Go to https://securitycopilot.microsoft.com/ and click Get Started to set up Microsoft Copilot for Security for the first time:

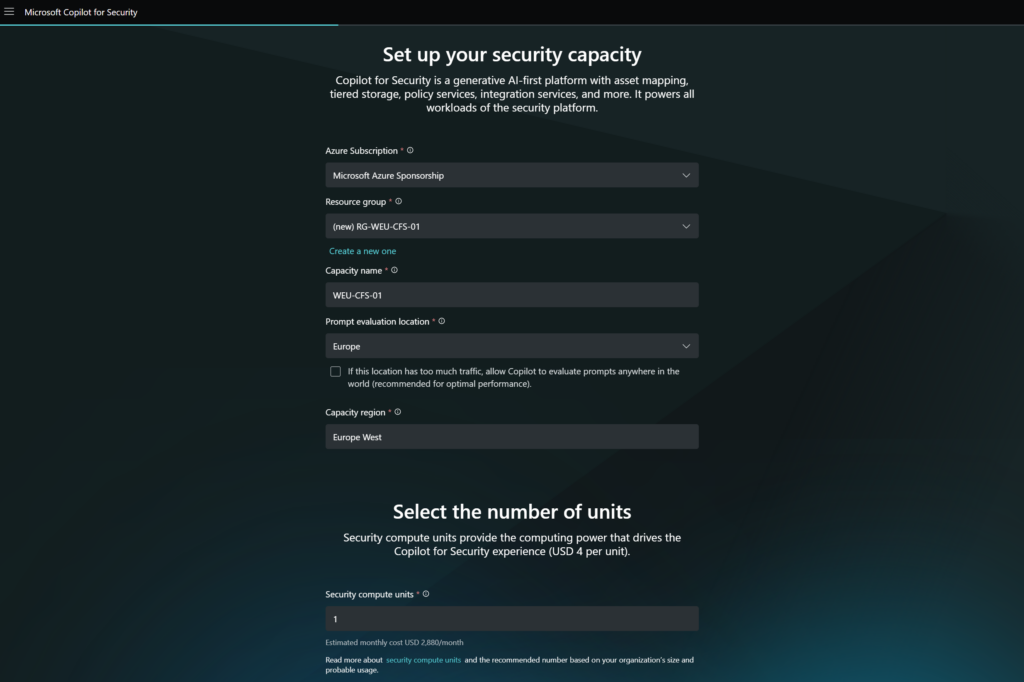

Next, we need to set up the security capacity. For this, we configure the Azure subscription, resource group, capacity name, and geo. It is recommended to use a dedicated resource group for Microsoft Copilot for Security.

The prompt evaluation location is important: make sure it matches the geo of your environment and existing products to avoid privacy concerns related to generative AI.

Deploying the resources on the backend takes a few minutes; 5-10 minutes in my experience.

Security Copilot default environment

The second step is configuring the environment. This step requires the Global Administrator or Security Administrator role. The permissions on the Azure resource are also important: make sure you are an Azure Owner or Contributor where the resource is located (step 1).

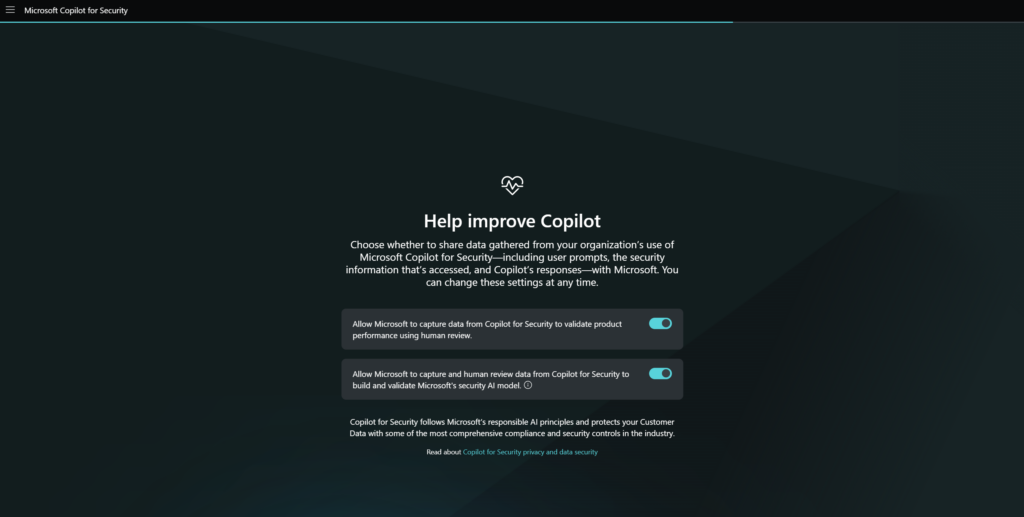

Via https://securitycopilot.microsoft.com/ we can start using the capacity. The next step is configuring the data-sharing options. Data sharing depends on your environment and your privacy policy related to AI.

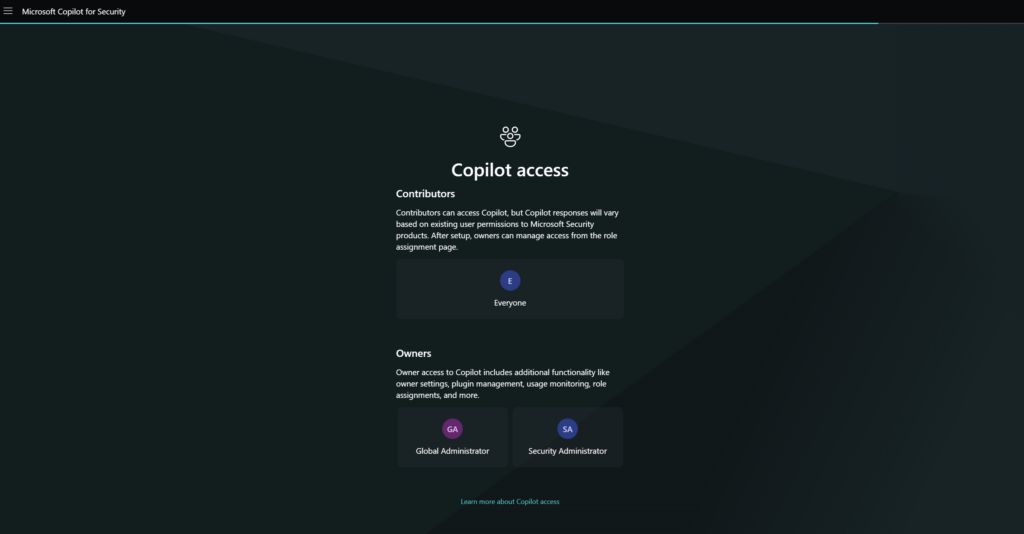

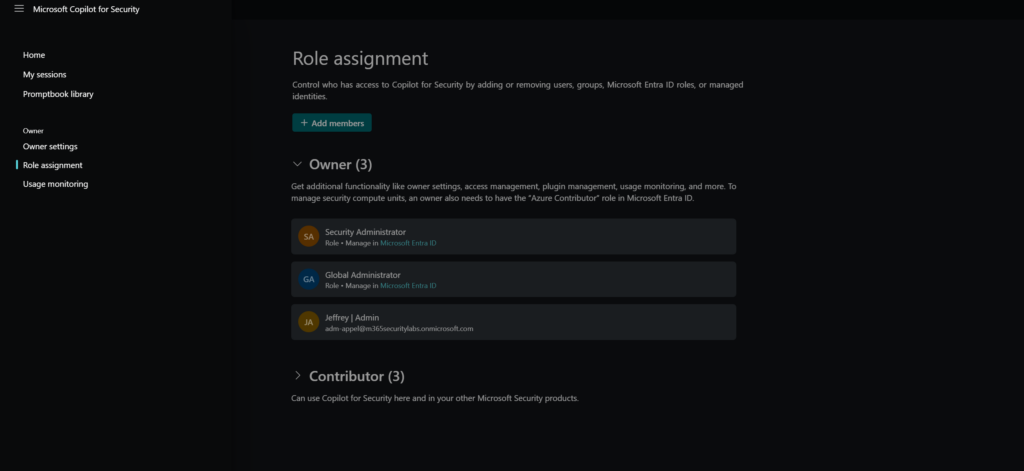

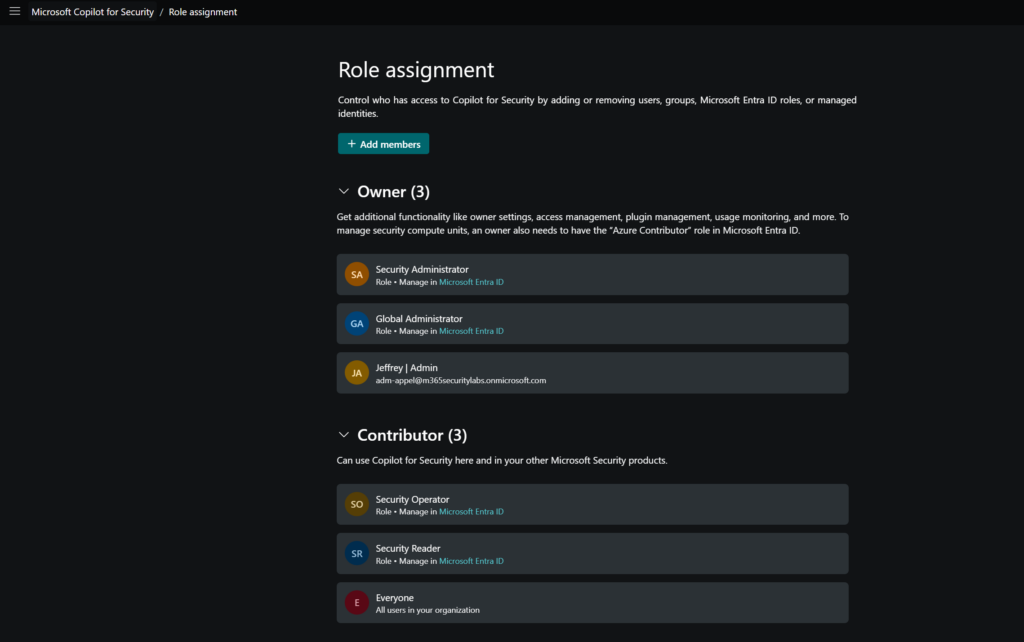

You'll be informed of the default roles that can access Copilot for Security. By default, two roles are created:

- Contributors (everyone)

- Owners (Global Administrators and Security Administrators)

After the initial setup, you can define the access model on the role assignment page and assign specific roles.

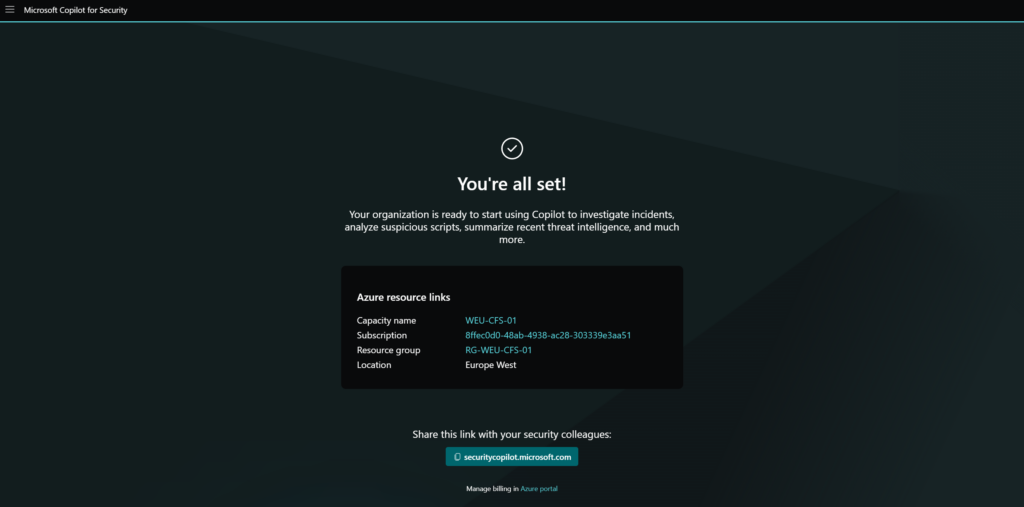

Copilot is now configured. From this point on, Security Copilot is billed per hour via Azure billing.

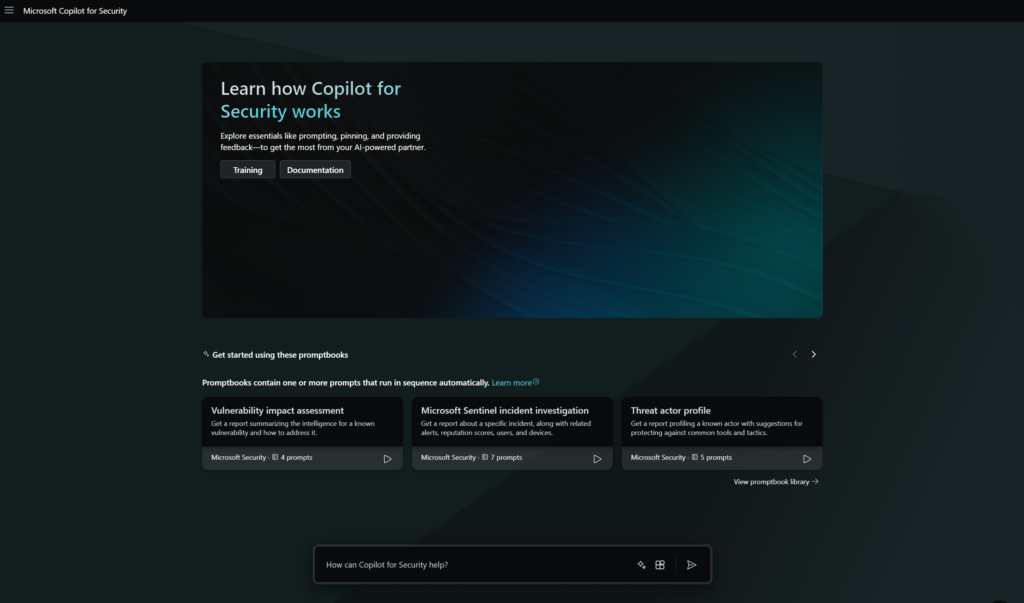

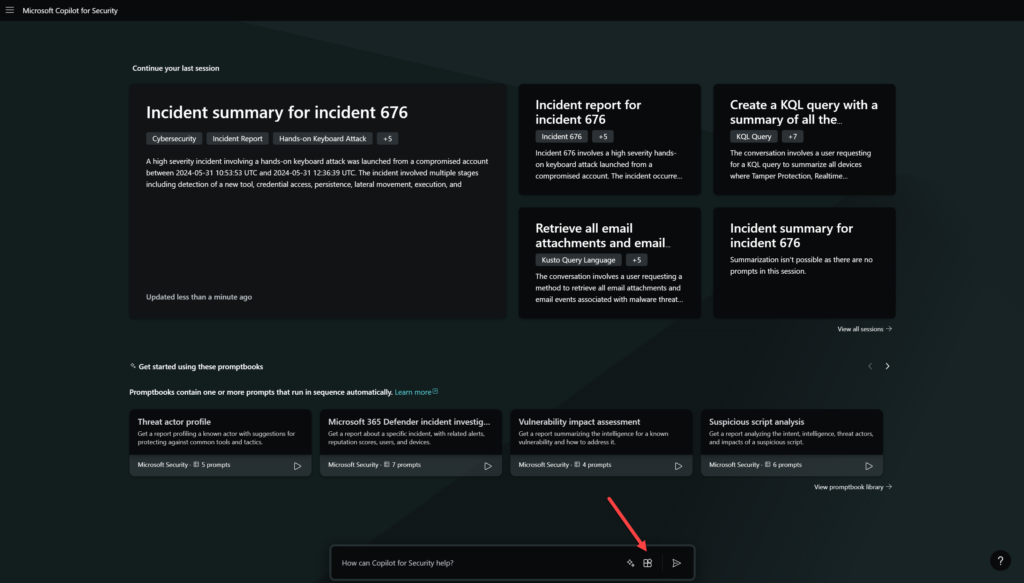

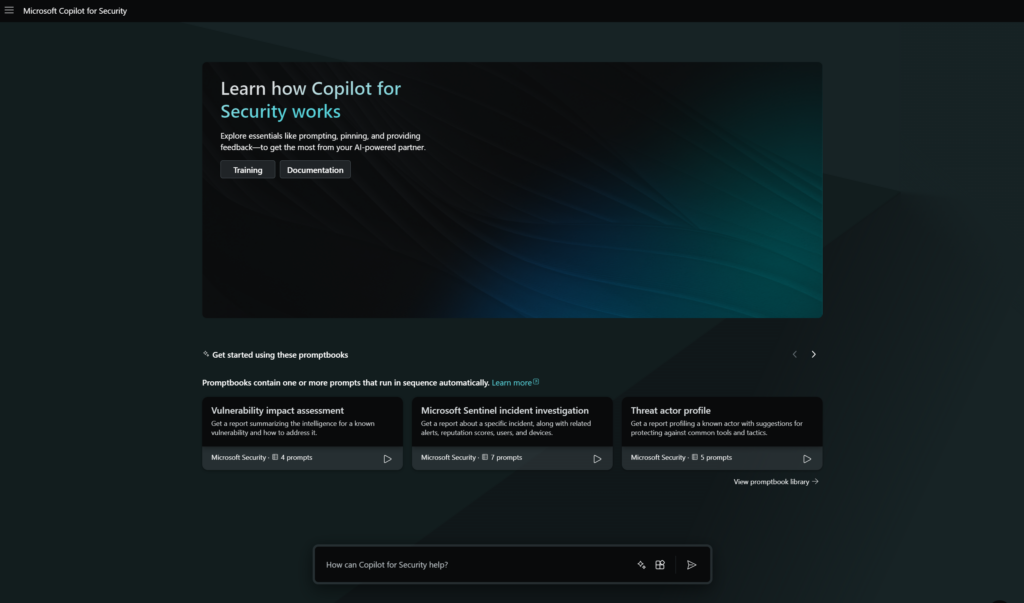

The first view after setup is the Copilot for Security portal, with sample promptbooks and a prompt window.

Permissions

Good to know is how authentication works in Copilot for Security. Copilot uses on-behalf-of authentication: it acts with the access of the signed-in account. After you authenticate to the Copilot platform, it uses the data available to the signed-in account. Your data access determines which plugins are available in prompts and which data is visible.

By default, all users in the Microsoft Entra tenant are given Copilot contributor access. Copilot for Security introduces two new roles (Copilot owner and Copilot contributor). Good to know: these roles are not part of Entra ID; they are part of the access management in Copilot for Security itself.

The following Entra roles automatically get Copilot owner access:

- Security Administrator

- Global Administrator

A good example of how the flow works:

- An analyst is assigned the Copilot contributor role. This role gives access to the Copilot platform, including the option to create new sessions.

- To use plugins and reach security data, the analyst also needs the relevant security roles.

- For the Microsoft Sentinel plugin, you still need the Microsoft Sentinel Reader role.

- For Defender XDR, you need access via Unified RBAC or the built-in security roles.
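The two layers in this flow (a Copilot role to use the platform, product roles to reach data) can be modeled in a few lines of Python. This is a minimal sketch for illustration: the functions are hypothetical, and the Defender XDR role names in `PLUGIN_DATA_ROLES` are assumed examples rather than a complete list.

```python
# Illustrative only: product roles that unlock each plugin's data.
# The Defender XDR role names are assumptions for this sketch.
PLUGIN_DATA_ROLES = {
    "Microsoft Sentinel": {"Microsoft Sentinel Reader"},
    "Defender XDR": {"Security Reader", "Security Operator"},
}

# Entra roles that get Copilot owner access by default.
COPILOT_OWNER_ENTRA_ROLES = {"Global Administrator", "Security Administrator"}


def default_copilot_role(entra_roles: set[str]) -> str:
    """Copilot role under the default setup, where Everyone is a contributor."""
    if entra_roles & COPILOT_OWNER_ENTRA_ROLES:
        return "Copilot owner"
    return "Copilot contributor"


def plugins_with_data(product_roles: set[str]) -> list[str]:
    """Plugins whose data the signed-in user can reach (on-behalf-of access).

    The Copilot role only opens the platform; data access still depends
    on the user's own roles in each security product.
    """
    return sorted(
        plugin for plugin, roles in PLUGIN_DATA_ROLES.items() if product_roles & roles
    )


analyst_entra_roles: set[str] = set()
analyst_product_roles = {"Microsoft Sentinel Reader"}
print(default_copilot_role(analyst_entra_roles))    # Copilot contributor
print(plugins_with_data(analyst_product_roles))     # ['Microsoft Sentinel']
```

In this model, the analyst can open sessions as a contributor but only gets answers grounded in Sentinel data, because no Defender XDR role is assigned.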

Via role assignment, it is possible to add more members or groups to the owner or contributor role.

When needed, you can remove the Everyone group from the contributor role and define custom groups to limit the usage of Copilot for Security (recommended, from my point of view).

An important step in the configuration is the owner settings. In this view, you can configure restrictions for file uploads, the usage of plugins, and the upload of custom plugins. It is recommended to restrict the use of custom plugins to owners.

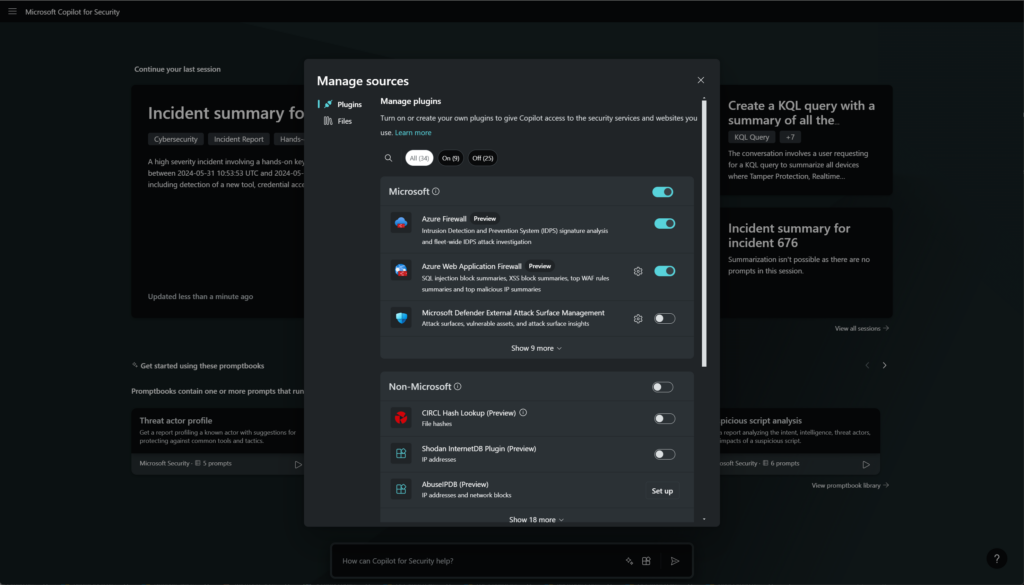
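The recommended owner setting boils down to a simple authorization rule. A minimal Python sketch, assuming hypothetical setting names and values; in the product this is configured in the owner settings view, not in code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OwnerSettings:
    # Hypothetical names for options in the owner settings view.
    # Each value is "owners" or "everyone".
    custom_plugin_upload: str = "owners"   # the recommended restriction
    file_upload: str = "everyone"


def may_perform(action: str, copilot_role: str, settings: OwnerSettings) -> bool:
    """Check whether a Copilot role ("owner" or "contributor") may perform an action."""
    allowed = {
        "custom_plugin_upload": settings.custom_plugin_upload,
        "file_upload": settings.file_upload,
    }[action]
    return allowed == "everyone" or copilot_role == "owner"


settings = OwnerSettings()
print(may_perform("custom_plugin_upload", "contributor", settings))  # False
print(may_perform("custom_plugin_upload", "owner", settings))        # True
```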

Plugins and policies

After the initial phase, you can define security policies and plugins. Each plugin implements skills, and Copilot evaluates which skills to use based on the activated plugins. If a plugin is not enabled in Security Copilot, it is not possible to run prompts that rely on it.

Copilot for Security comes with two different types of plugins:

- Preinstalled plugins: Copilot for Security comes with a set of preinstalled plugins that allow it to source information when responding to your prompts. Examples: Defender Threat Intelligence, Azure Firewall, Defender XDR.

- Custom plugins: these extend Microsoft Copilot for Security capabilities by integrating with third-party solutions or adding custom functionality. Examples: CyberArk, Darktrace, Jamf, Shodan, URLScan.
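The relationship between plugins and skills can be sketched as a lookup that only considers enabled plugins. This is a simplified Python model: in the real product the orchestrator and the model decide which skill fits a prompt, and apart from `GetDefenderIncident` (used later in this article) the skill names below are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Plugin:
    name: str
    enabled: bool
    skills: List[str] = field(default_factory=list)


def available_skills(plugins: List[Plugin]) -> Dict[str, str]:
    """Map each skill to its plugin, considering only enabled plugins."""
    return {skill: p.name for p in plugins if p.enabled for skill in p.skills}


def plugin_for_skill(skill: str, plugins: List[Plugin]) -> Optional[str]:
    """Return the enabled plugin that provides a skill, or None if none does."""
    return available_skills(plugins).get(skill)


plugins = [
    Plugin("Defender XDR", enabled=True, skills=["GetDefenderIncident"]),
    Plugin("Shodan", enabled=False, skills=["LookupHost"]),  # hypothetical skill name
]
print(available_skills(plugins))               # {'GetDefenderIncident': 'Defender XDR'}
print(plugin_for_skill("LookupHost", plugins))  # None: the plugin is disabled
```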

To configure plugins, click the small icon:

Now you can turn on and create plugins to give Copilot access to the data. There are two types of plugins (Microsoft and non-Microsoft).

For example, you can connect Attack Surface Management. Good to know: Defender Threat Intelligence is included in the Copilot for Security plan, so you do not need to buy a separate Defender Threat Intelligence license or add-on.

Usage of Copilot for Security

Copilot for Security can be used in two ways. The first is the standalone experience via the securitycopilot.microsoft.com URL. The second is the embedded experience in the products themselves.

Standalone experience:

Available via securitycopilot.microsoft.com

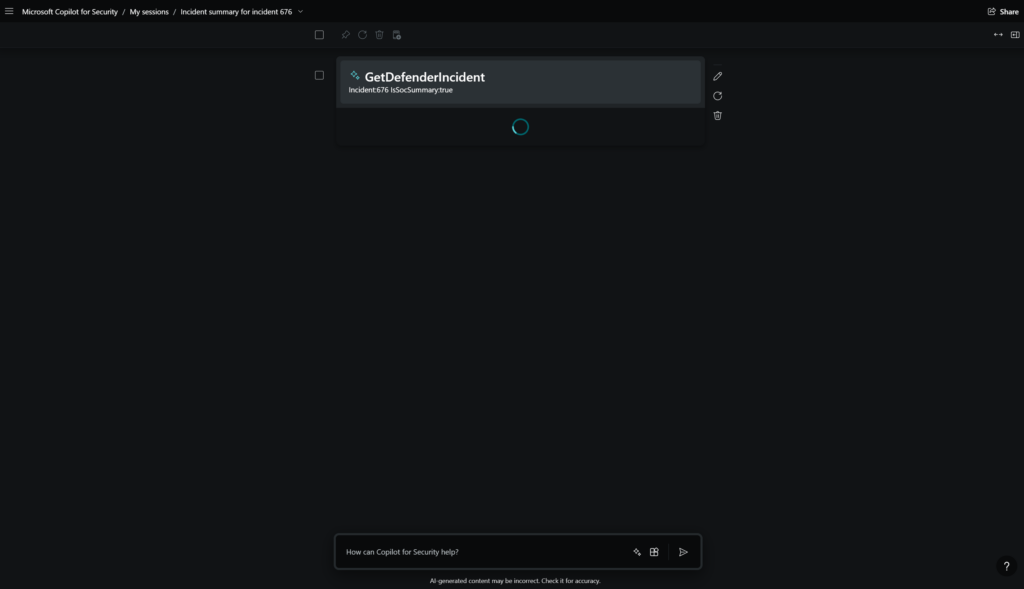

GetDefenderIncident to generate a SocSummary:

Embedded experience:

- Defender XDR

- Microsoft Entra

- Microsoft Intune

- Microsoft Purview

- Microsoft Defender Threat Intelligence

- Microsoft Defender for Cloud

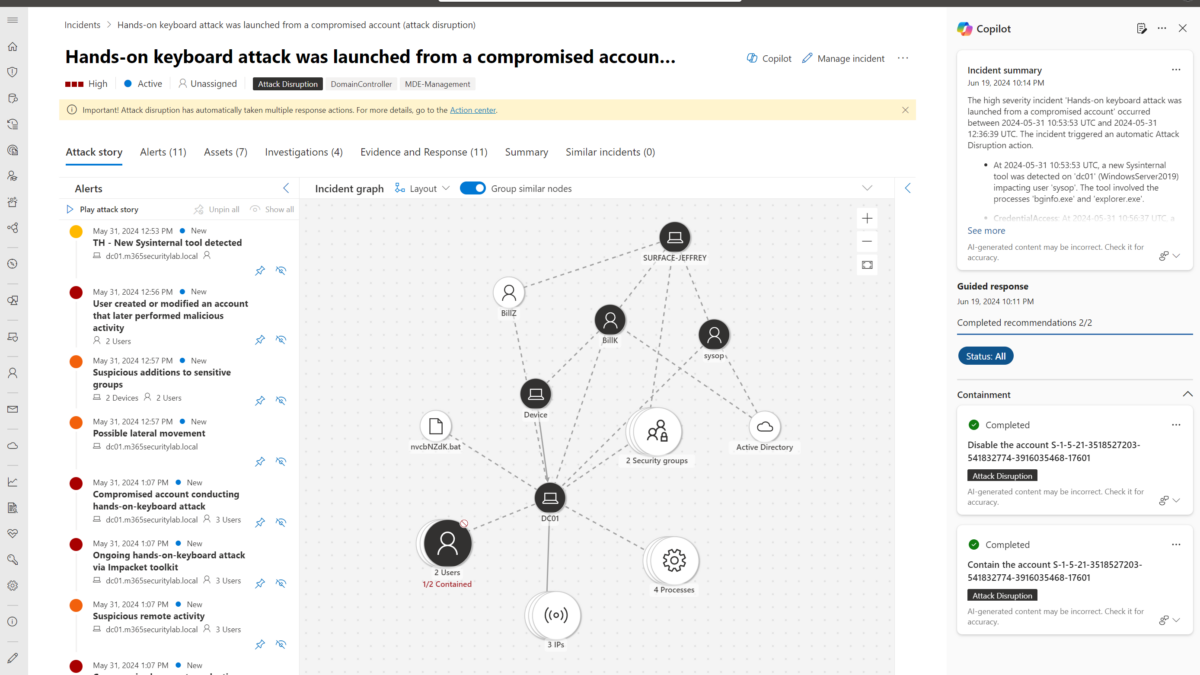

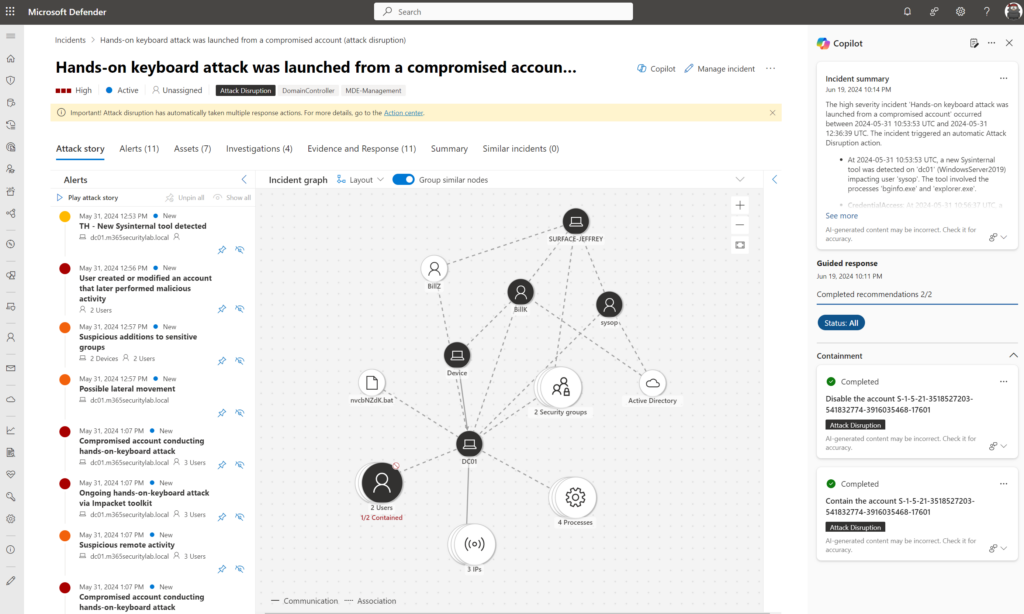

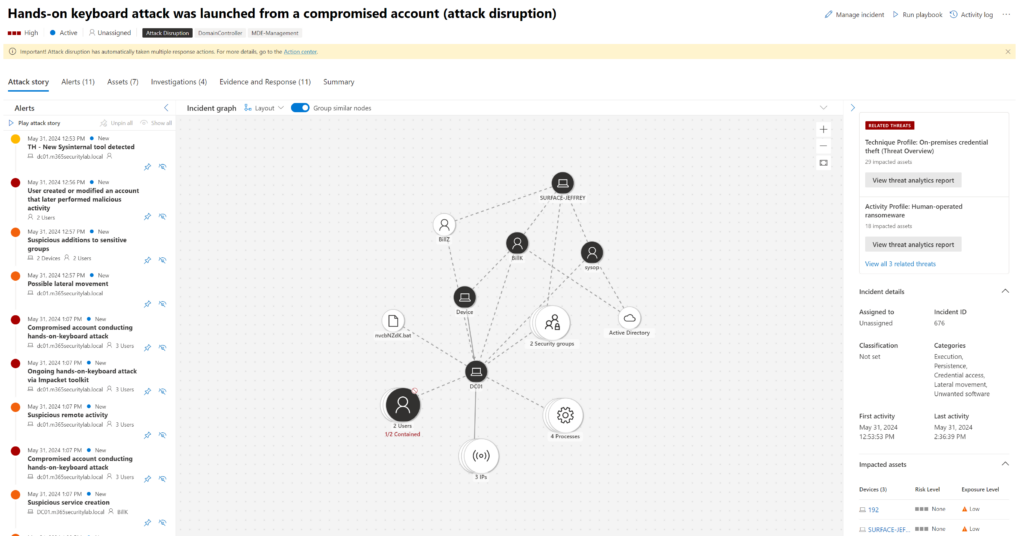

The embedded experience in Defender XDR, on the incident page of the hands-on-keyboard attack (attack disruption), generates a summary that includes all details. This is based on the following simulation: Automatic attack disruption in Microsoft Defender XDR and containing users during Human-operated Attacks

Good to know: 1 unit was not enough to generate this summary; it worked after switching to 3 units. As already mentioned, 1 unit is only suitable for basic prompts and commands. For advanced multi-stage alerts, you need more units. In my experience, even 3 units are quite limited for the more advanced investigations.

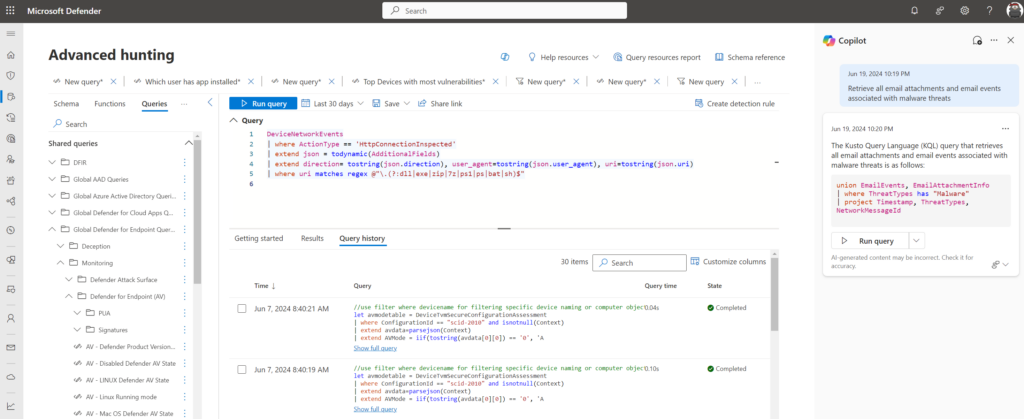

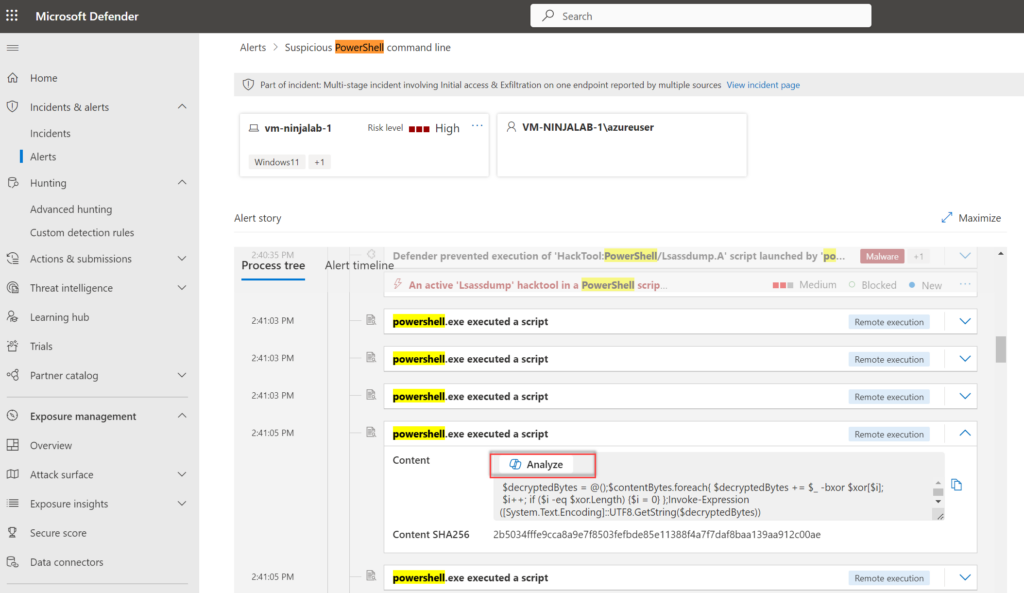

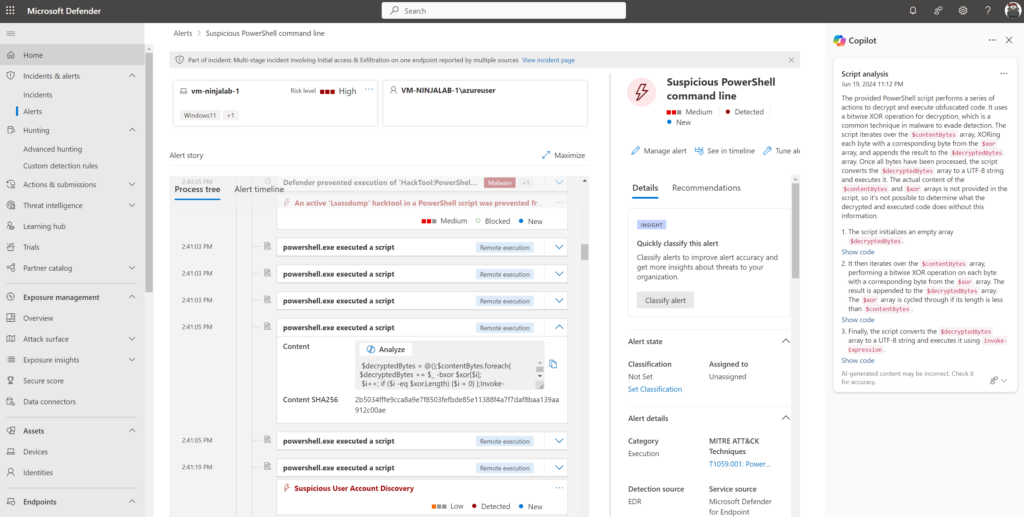

Examples of usage in Defender XDR embedded experience:

- Summarize incidents

- Analyze scripts and code

- Generate KQL queries for hunting

- Use guided response

- Create incident reports

- Summarize device information

- Analyze files

Experience in Defender XDR for generating Advanced Hunting queries:

Experience in the Alert view for investigating PowerShell content:

Copilot for Security extracts and analyzes the PowerShell script. Base64-encoded content is decoded into readable language:

More information on the usage and integrations available in the embedded experiences: Microsoft Copilot for Security experiences | Microsoft Learn
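Copilot performs the Base64 decoding of the PowerShell content automatically. To show what that step involves, here is a small Python sketch: PowerShell's `-EncodedCommand` parameter expects Base64 of a UTF-16LE string, so decoding reverses those two steps. The sample command is made up for illustration and is not taken from the incident.

```python
import base64


def encode_powershell(command: str) -> str:
    """Encode a command the way PowerShell -EncodedCommand expects (UTF-16LE, then Base64)."""
    return base64.b64encode(command.encode("utf-16-le")).decode("ascii")


def decode_powershell(encoded: str) -> str:
    """Turn a -EncodedCommand Base64 payload back into readable PowerShell."""
    return base64.b64decode(encoded).decode("utf-16-le")


sample = encode_powershell("Get-Process | Select-Object -First 5")
print(sample)                     # what an analyst would see on the command line
print(decode_powershell(sample))  # Get-Process | Select-Object -First 5
```

Decoding with UTF-8 instead of UTF-16LE is a common mistake; it produces text with a null byte between every character.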

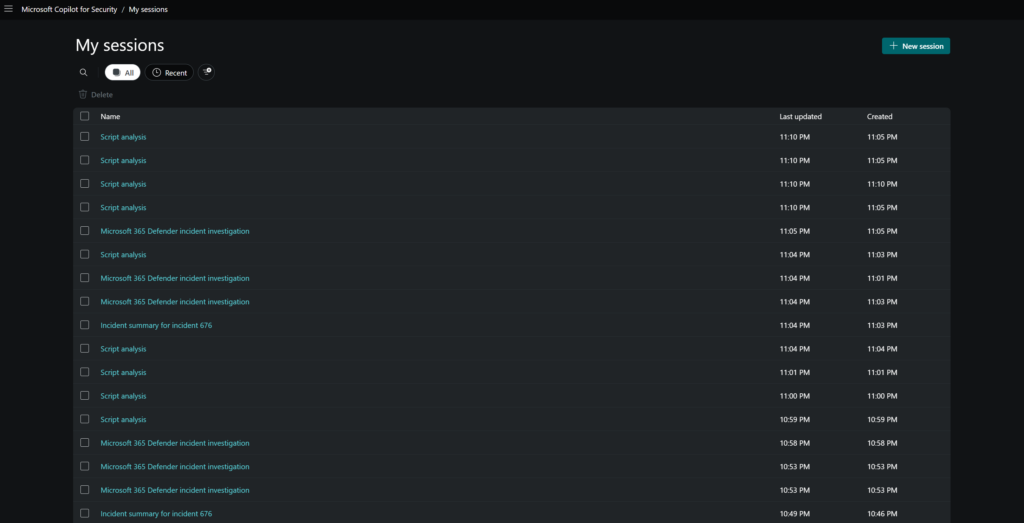

All prompts and sessions you run are visible in the My Session view in the standalone experience:

Promptbooks

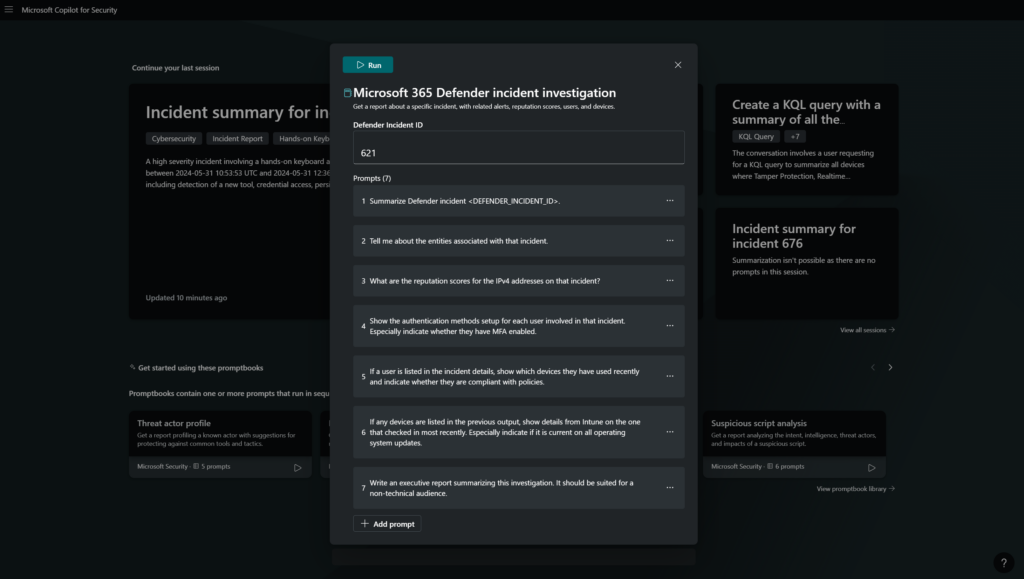

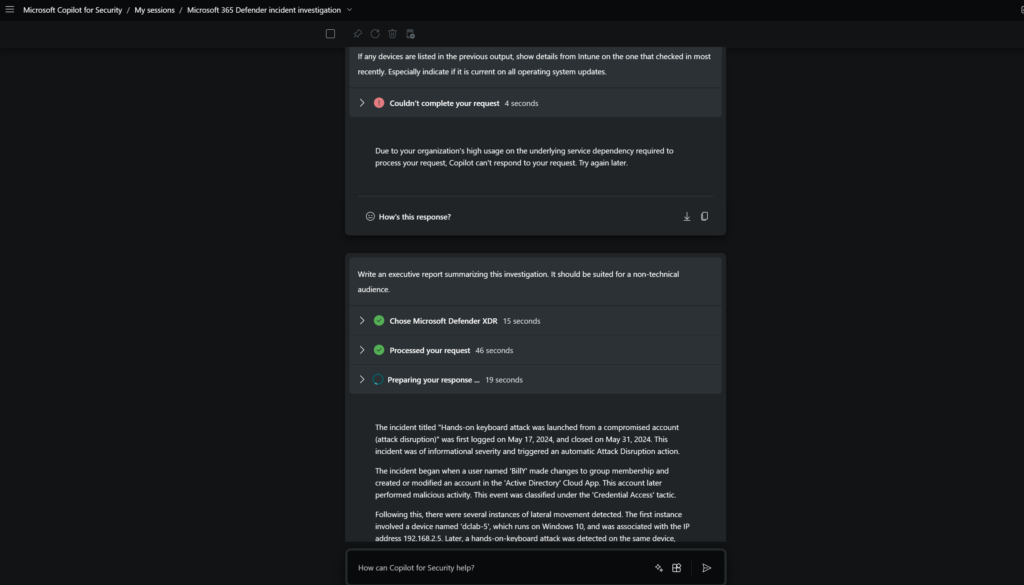

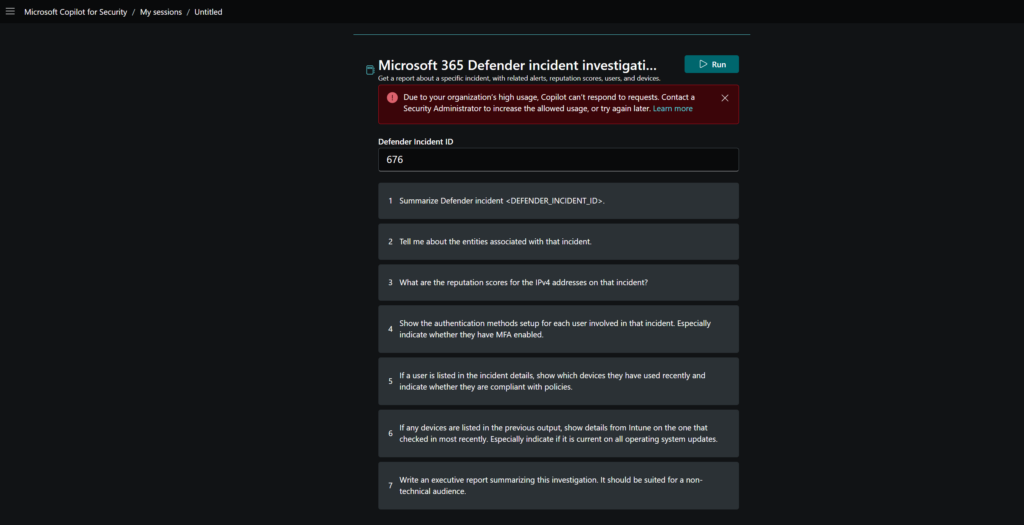

With promptbooks, it is possible to run multiple prompts in sequence. For example, the Microsoft 365 Defender incident investigation promptbook runs the following steps:

- Summarize Defender incident

- Tell me about the entities associated with that incident

- What are the reputation scores for the IPv4 address on that incident

- Show the authentication methods setup for each user involved in that incident

- If a user is listed in the incident details, show which device they have used recently and indicate whether they are compliant with policies

- If any devices are listed in the previous output, show details from Intune on the one that checked in most recently. Especially indicate if it is current on all operating system updates

- Write an executive report summarizing this investigation. It should be suited for a non-technical audience
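A promptbook is essentially an ordered list of prompts run in one session, where later prompts refer back to earlier output ("that incident", "the previous output"). The following Python sketch models that idea. It assumes a hypothetical `ask` callable that receives the prompt plus the session history and a `{incident_id}` input placeholder; it is not the Copilot for Security API.

```python
from typing import Callable, List, Tuple

# (prompt, history) -> response; history holds earlier (prompt, response) pairs.
AskFn = Callable[[str, List[Tuple[str, str]]], str]


def run_promptbook(prompts: List[str], ask: AskFn, **inputs: str) -> List[Tuple[str, str]]:
    """Run prompts in order within one session, filling {placeholders} from inputs."""
    history: List[Tuple[str, str]] = []
    for template in prompts:
        prompt = template.format(**inputs)
        # Each prompt sees all earlier answers, which is what lets it say "that incident".
        response = ask(prompt, history)
        history.append((prompt, response))
    return history


steps = [
    "Summarize Defender incident {incident_id}",
    "Tell me about the entities associated with that incident",
    "Write an executive report summarizing this investigation",
]


def echo_ask(prompt: str, history: List[Tuple[str, str]]) -> str:
    # Stand-in for Copilot: reports its position in the session.
    return f"answer #{len(history) + 1} to: {prompt}"


for prompt, answer in run_promptbook(steps, echo_ask, incident_id="621"):
    print(answer)
```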

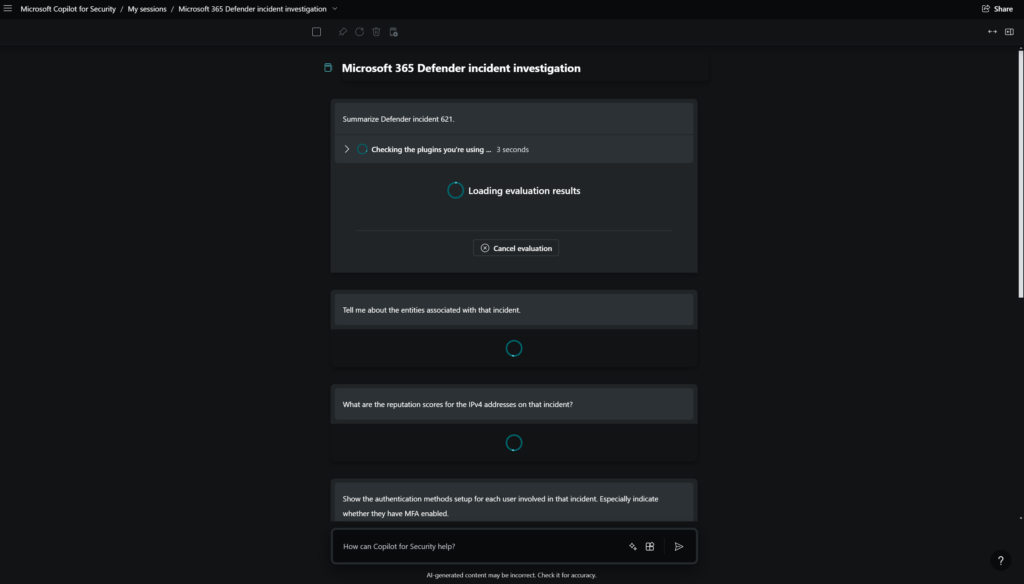

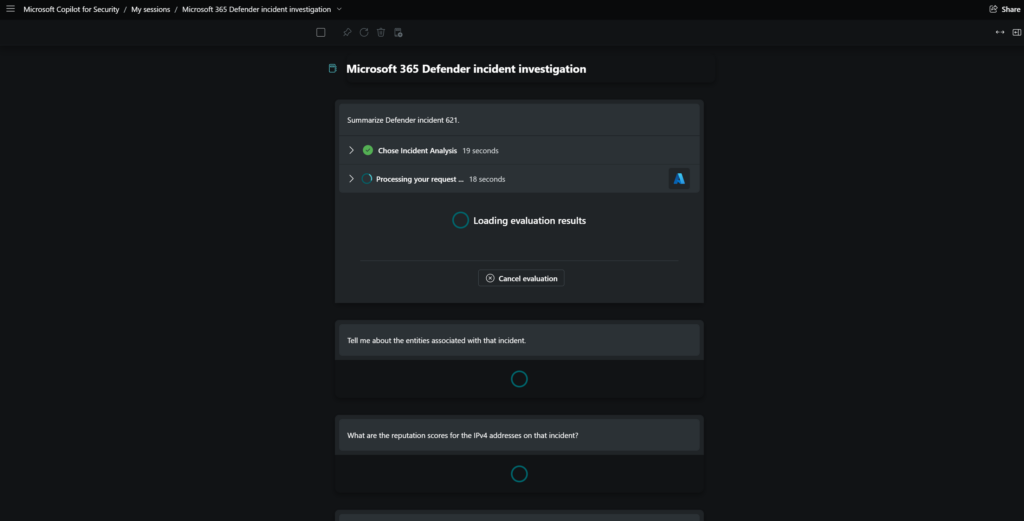

First, it checks the plugins needed for the promptbook.

After the plugins are connected, it starts running the prompts. This takes some time when the incident includes many alerts, evidence, and data.

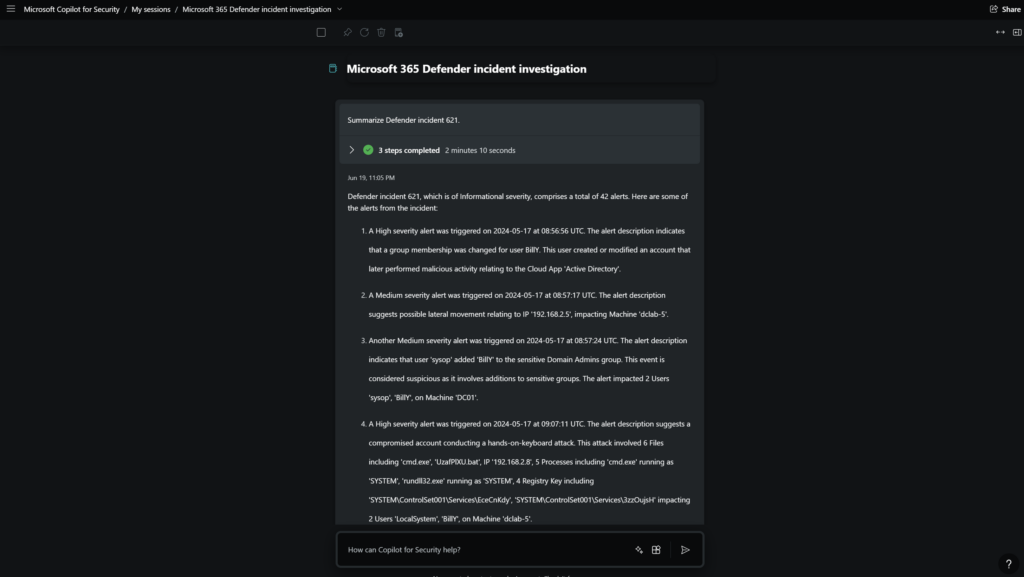

The incident includes 42 alerts and is based on human-operated ransomware including attack disruption.

As an example, here is the incident summary for this context (attack disruption of a hands-on-keyboard attack via a compromised account):

Summary example of the incident

Defender incident 621, which is of Informational severity, comprises a total of 42 alerts. Here are some of the alerts from the incident:

- A High severity alert was triggered on 2024-05-17 at 08:56:56 UTC. The alert description indicates that a group membership was changed for user BillY. This user created or modified an account that later performed malicious activity relating to the Cloud App ‘Active Directory’.

- A Medium severity alert was triggered on 2024-05-17 at 08:57:17 UTC. The alert description suggests possible lateral movement relating to IP ‘192.168.2.5’, impacting Machine ‘dclab-5’.

- Another Medium severity alert was triggered on 2024-05-17 at 08:57:24 UTC. The alert description indicates that user ‘sysop’ added ‘BillY’ to the sensitive Domain Admins group. This event is considered suspicious as it involves additions to sensitive groups. The alert impacted 2 Users ‘sysop’, ‘BillY’, on Machine ‘DC01’.

- A High severity alert was triggered on 2024-05-17 at 09:07:11 UTC. The alert description suggests a compromised account conducting a hands-on-keyboard attack. This attack involved 6 Files including ‘cmd.exe’, ‘UzafPlXU.bat’, IP ‘192.168.2.8’, 5 Processes including ‘cmd.exe’ running as ‘SYSTEM’, ‘rundll32.exe’ running as ‘SYSTEM’, 4 Registry Key including ‘SYSTEM\ControlSet001\Services\EceCnKdy’, ‘SYSTEM\ControlSet001\Services\3zzOujsH’ impacting 2 Users ‘LocalSystem’, ‘BillY’, on Machine ‘dclab-5’.

- A High severity alert was triggered on 2024-05-17 at 09:07:39 UTC. The alert description indicates a process memory dump involving 5 Processes including ‘cmd.exe’ running as ‘SYSTEM’, ‘services.exe’ running as ‘SYSTEM’ impacting User ‘BillY’, on Machine ‘dclab-5’.

- Another High severity alert was triggered on 2024-05-17 at 09:07:39 UTC. The alert description suggests a compromised account conducting a hands-on-keyboard attack. This attack involved 4 Files including ‘tYKVfiZg.bat’, ‘DrDBYWRM.bat’, IP ‘192.168.2.8’, 2 Processes ‘lsass.exe’ running as ‘SYSTEM’, ‘services.exe’ running as ‘SYSTEM’, 4 Registry Key including ‘SYSTEM\ControlSet001\Services\XbUYzFTU’, ‘SYSTEM\ControlSet001\Services\LyTIxexv’ impacting 2 Users ‘LocalSystem’, ‘BillY’, on Machine ‘dc01’.

- An Informational severity alert was triggered on 2024-05-17 at 09:17:18 UTC. The alert description indicates that lateral movement using RDP was blocked impacting User ‘billy’, on Machine ‘dc01’.

- Another Informational severity alert was triggered on 2024-05-17 at 09:18:39 UTC. The alert description suggests that lateral movement using remote logon by contained user ‘billy’ was blocked on multiple devices relating to IP ‘192.168.2.7’ impacting User ‘billy’, on Machine ‘dc01’.

In summary, the incident ‘Hands-on keyboard attack was launched from a compromised account (attack disruption)’ occurred between 2024-05-17 08:56:56 UTC and 2024-05-17 09:18:39 UTC. It triggered an automatic Attack Disruption action. The incident involved Credential Access, Lateral Movement, and Persistence tactics. The incident began with user ‘BillY’ modifying an account in the ‘Active Directory’ cloud app that later performed malicious activity. This was followed by possible lateral movement detected on the device ‘dclab-5’ (Windows10) involving the IP 192.168.2.5. User ‘sysop’ added ‘BillY’ to the sensitive ‘Domain Admins’ group on the machine ‘DC01’, indicating suspicious additions to sensitive groups. A hands-on-keyboard attack was detected on ‘dclab-5’ (Windows10) involving the ‘cmd.exe’ and ‘rundll32.exe’ processes running as ‘SYSTEM’, and impacting users ‘LocalSystem’ and ‘BillY’. Finally, lateral movement using remote logon by the contained user ‘billy’ was blocked on multiple devices, including ‘dc01’ (WindowsServer2019), involving the IP 192.168.2.7

Prompt: Write an executive report summarizing this investigation. It should be suited for a non-technical audience.

Summary example for a non-technical audience

The incident titled “Hands-on keyboard attack was launched from a compromised account (attack disruption)” was first logged on May 17, 2024, and closed on May 31, 2024. This incident was of informational severity and triggered an automatic Attack Disruption action.

The incident began when a user named ‘BillY’ made changes to group membership and created or modified an account in the ‘Active Directory’ Cloud App. This account later performed malicious activity. This event was classified under the ‘Credential Access’ tactic.

Following this, there were several instances of lateral movement detected. The first instance involved a device named ‘dclab-5’, which runs on Windows 10, and was associated with the IP address 192.168.2.5. Later, a hands-on-keyboard attack was detected on the same device, involving multiple files and processes, including ‘cmd.exe’ and ‘rundll32.exe’, both running as SYSTEM. The attack also involved the IP 192.168.2.8 and impacted the ‘LocalSystem’ and ‘BillY’ users.

Another user named ‘sysop’ added ‘BillY’ to the sensitive ‘Domain Admins’ group on a machine named ‘DC01’. This event was classified under the ‘Persistence’ tactic as it indicated suspicious additions to sensitive groups.

Further lateral movement was detected on a device named ‘dc01’, which runs on Windows Server 2019. This involved multiple files, processes, and registry keys, and impacted the ‘LocalSystem’ and ‘BillY’ users. Later, lateral movement using RDP was blocked on the same device, impacting a user named ‘billy’. Finally, lateral movement using remote logon by the contained user was blocked on multiple devices involving IP 192.168.2.7, impacting user ‘billy’ on ‘dc01’.

In response to the incident, an attack disruption action was triggered. This action disabled a potentially compromised user account in Active Directory to prevent it from accessing resources. The same account was contained by the attack disruption. Later on, the containment on the account was released by the attack disruption.

As of now, the incident remains unclassified, and no follow-up actions have been taken yet.

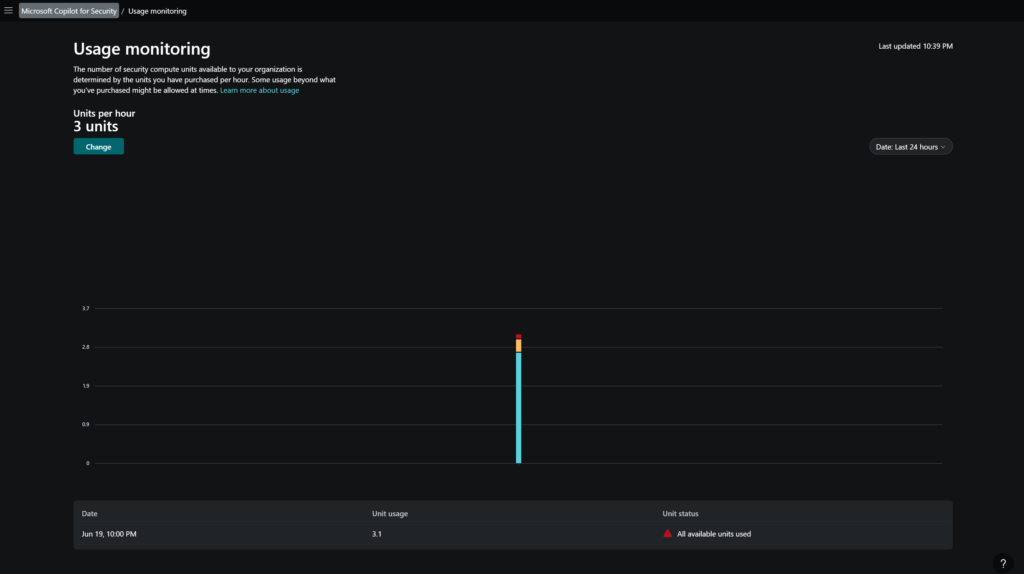

Usage

The usage of Copilot for Security can be tracked with usage monitoring. The monitoring view shows how many units have been used in the past hours. In the screenshot below, the usage is 3.1 while 3 units are provisioned, so all units are in use.

Prompts cannot run when usage is high. The following message is shown:

“Due to your organization’s high usage, Copilot can’t respond to requests. Contact a Security Administrator to increase the allowed usage, or try again later”
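The behaviour above can be summarized as: once consumption reaches the provisioned units, new prompts are rejected until usage drops or capacity is increased. A minimal Python sketch of that rule; the exact threshold logic Copilot uses is not documented in this article, so the `>=` comparison is an assumption.

```python
from typing import Optional

THROTTLE_MESSAGE = (
    "Due to your organization's high usage, Copilot can't respond to requests. "
    "Contact a Security Administrator to increase the allowed usage, or try again later"
)


def check_capacity(used_units: float, provisioned_units: int) -> Optional[str]:
    """Return the throttle message if usage has reached the provisioned units, else None.

    Assumption: throttling starts as soon as usage meets the provisioned capacity.
    """
    if used_units >= provisioned_units:
        return THROTTLE_MESSAGE
    return None


print(check_capacity(3.1, 3) is not None)  # the situation in the screenshot: throttled
print(check_capacity(1.2, 3))              # None: prompts can still run
```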

Sources

Tip: check out the Copilot for Security community on GitHub. It includes various resources such as promptbooks, guides, Logic Apps, and sample prompts. Microsoft Copilot for Security GitHub

Microsoft: How to Become a Microsoft Copilot for Security Ninja: The Complete Level 400 Training

Microsoft: Microsoft Copilot for Security GitHub

Microsoft: MS Learn Doc for Microsoft Copilot for Security